Fly12 Sport

Get the most out of your Fly12 Sport


SAVE 30%

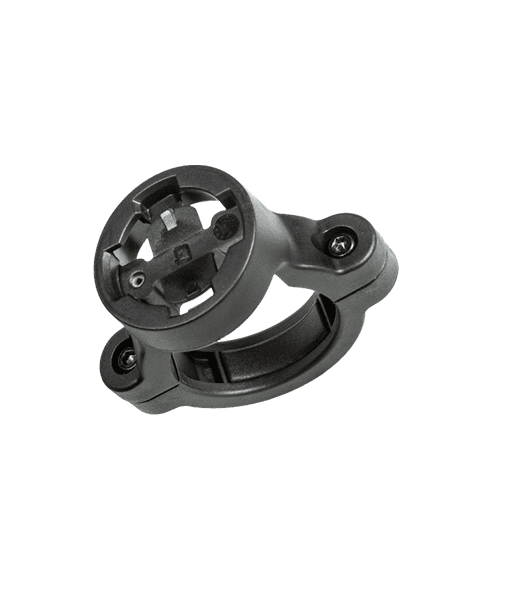

Duo Mount

USD $45.49

SAVE 30%

Fly12 – Handlebar Mount

USD $16.49

SAVE 30%

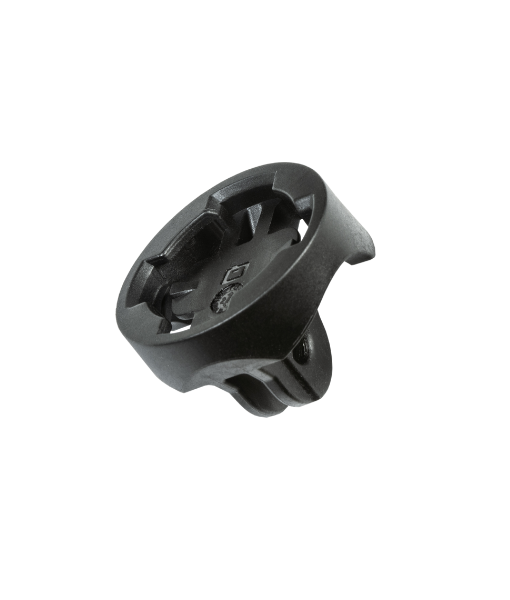

Universal Adaptor Pack

USD $11.89

SAVE 30%

microSD OTG Card Reader

USD $13.99

SAVE 30%

Microphone Filters

USD $11.89

SAVE 30%

Cycliq 64GB microSD Card

USD $24.49

SAVE 30%

Tether Pack

USD $15.39

SAVE 30%

Fly12 Sport Silicone Case

USD $13.99

SAVE 30%

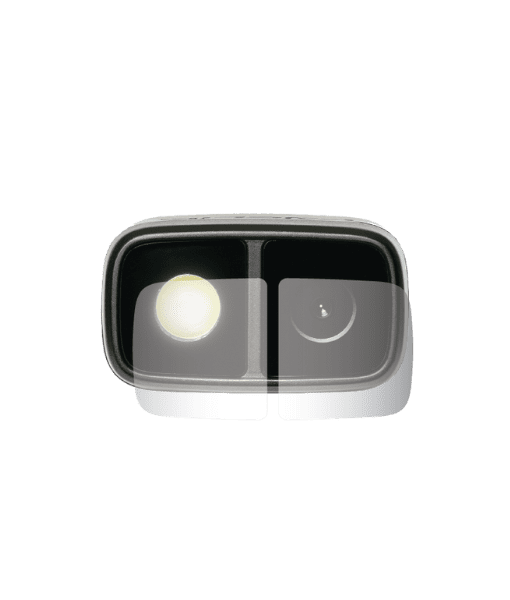

Fly12 Sport Lens Protector Pack

USD $11.89
